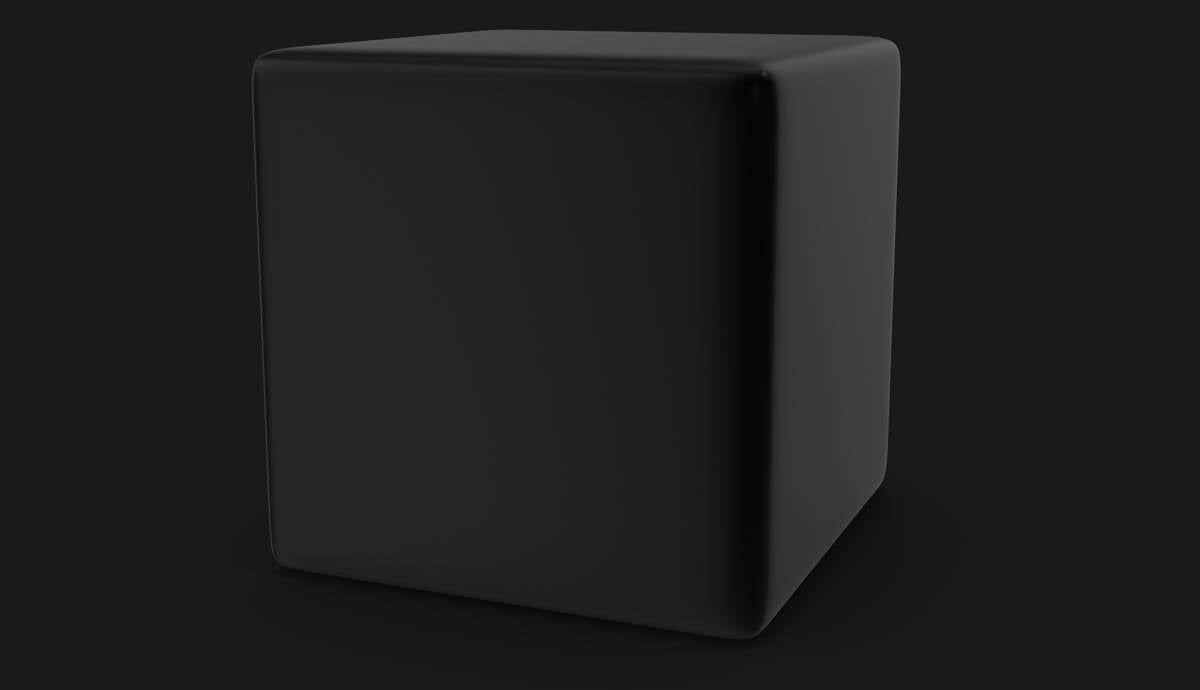

Shadowbanning, Muting, Unboosting and De-Ranking are technology tactics social media companies use to silently control speech without letting their users know they’re being censored. Say something controversial or ask the wrong question and your voice can get dialed down without you knowing it. Unlike getting punished with a stint in “Facebook Jail”, hidden algorithms can make sure nobody sees what you’re posting. It’s futile to try to change it or fight it – free speech should never be expected on platforms that you can’t control, where the exposure your content gets is decided inside a black box.

Free speech is a value Americans have recognized and cherished as a principle that defines what America is. There is, however, a big difference between the written law of the First Amendment and the rights of Big Tech companies to control content on their properties. The First Amendment was written only to limit government, not private companies.

The Bait and Switch

Let’s go back to the early days of social media, before Silicon Valley performed a bait-and-switch with free speech. When Facebook and Twitter first started you’d see all the content shared by people you’re connected to. When a business posted content it would be shown to the whole audience who Followed or Liked the business. It didn’t take long for this to change. The rules were changed to limit whose content gets shown. Businesses who spent effort and money getting fans, so their loyal customers would see their posts, discovered they’d need to spend extra money if their fan base was going to be shown the content. Free speech was turned into “expensive speech” for businesses expecting social media to connect with audiences.

The Bait

For a while, before that happened, you’d see businesses putting stickers in their windows, promoting Facebook in their brick and mortar locations. “Follow us on Facebook” they’d ask, because it had a direct benefit to the business if a customer connected to them through a Facebook Page.

The Switch

But now you don’t really see that any more. Hardly anyone is excited about promoting Facebook with stickers and calls-to-action displayed in windows and next to cash registers to the same degree business owners used to. Businesses wised up – getting a following on Facebook wasn’t what it used to be. What was valuable had been made much less valuable by the change in rules – the switch in the algorithm.

Now We’re Trapped

What was “free” now costs a lot, and hardly works the way it claims it will. For example, Facebook might tell you that it costs $15 to reach 2000 people, but if you spend that $15 you might discover the post was only seen by 200 people. Sponsoring a post marks it with “Sponsored” so the audience knows it’s an ad. The bait-and-switch, the unimpressive delivery relative to promises, and the “Sponsored” label all make running ads on Facebook less than appealing compared to the days when Facebook first started. I’ve even caught Facebook doing some sneaky stuff with my ad dollars, stuff that’s clearly dishonest to ad buyers.
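To put numbers on that example, here is a minimal sketch in Python of the cost-per-person arithmetic. The function name is made up for illustration, and the figures are only the hypothetical ones from the example above, not real Facebook pricing.

```python
def cost_per_person(spend_dollars: float, people_reached: int) -> float:
    """Return how many dollars were spent for each person reached."""
    if people_reached <= 0:
        raise ValueError("people_reached must be a positive number")
    return spend_dollars / people_reached


# Hypothetical figures taken from the example in the text.
quoted = cost_per_person(15, 2000)    # what the ad estimate suggested
delivered = cost_per_person(15, 200)  # what the post actually reached

print(f"Quoted cost per person:    ${quoted:.4f}")
print(f"Delivered cost per person: ${delivered:.4f}")
print(f"Delivered is about {delivered / quoted:.0f}x the quoted cost")
```

By this rough math, each person actually reached costs about ten times what the estimate implied.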

The Black Box

Casting money into a black box isn’t appealing when what comes out isn’t worth much. Many ad buyers probably have a better chance of getting a return on their money from a slot machine. Slot machines are subject to being audited by Gaming Commissions – social media isn’t audited at all. Slot machines are subject to regulations – social media still isn’t.

Some Censorship Is Good and Not Against Free Speech

Most comments submitted to this website are removed. This isn’t to abridge anyone’s free speech. This censorship is done to remove automated “spam” comments made constantly by bots. These bots submit comments 24/7 that are easily recognized by me as unwanted abuses of the feature that allows commenting. The comments that are removed are mostly comments from bots posting “spam ads” for pharmaceuticals, retail products and web services. Sometimes the bots just post nonsense for SEO, to get an inbound link from this site to theirs. Less than one out of a hundred comments are from legitimate readers like yourself. Legitimate comments are very welcome, but spammy comments are not. As the publication owner I am most certainly abridging the “free speech” of unscrupulous, annoying spammers who are looking to sell their products and services, or simply get “backlinks” to their own sites for SEO benefits.

The kind of restriction I’m exercising, as the owner of the publication/platform, isn’t intended to abridge sincere people’s sentiment – it’s reasonably done to keep the quality of the content published here from suffering. If I don’t want my articles shown with a slew of porn links at the end, that’s my right. I’m not doing it to secretly influence people’s choices and awareness about social issues. I’m exercising my right to select and censor comments that are spammy and inappropriate, to maintain a level of quality and the “tone” I reserve the right to keep as the publisher.

My right to remove untoward comments is a form of free speech I should have as a publisher. As a publisher I should have a right to decide what gets published through my online properties, even if it is added via a feature, comments, that makes my publication behave like a platform.

Technology companies, owners of websites, their sponsors and investors should retain a legal right to censor and moderate the content contributions of their audiences and end-users, regardless of whether the technology is defined as a platform or a publication.

How a Platform Differs from a Publication

Examining something that’s more clearly a platform, for example telephone conversations, or even direct messaging applications, draws a clear distinction between what defines a platform as separate from a publication. Both contain and facilitate content being exposed and exchanged between audiences or end users. However, there’s an expectation of privacy in a pure platform where the end-users have a reasonable expectation that their content won’t be exposed to the public-at-large.

The difference between this website and Facebook is who is providing the content. Here, I write the articles. This is where I post my content. On Facebook, however, who is using it because they care most about what Mark Zuckerberg is saying? Most people use Facebook because it allows them to post their content so friends can see it. Most people use Facebook to see what their friends posted. It’s user-contributed content that defines Facebook and Twitter’s value, not the content created by its publishers. That’s the biggest, most important distinction between a platform and a publication.

The implied expectation on platforms, for end users who are contributing content, is that their content will be shown, not hidden. Equity is expected – it’s implied. If I’m posting content on a social media platform I expect it to be shown to the same degree other people’s content is shown. We expect platforms to be impartial to what we post. However, that’s not how it works. The degree to which content is shown is governed by algorithms.

It’s important to understand how Big Tech companies exercise their rights on a technical level. Does the technology need to be regulated in a better way, without sacrificing the rights of either technology owners or contradicting the expectations of technology end-users?

Platforms publicly justify giving their algorithms control over who sees content with virtuous reasons, both for the benefit of users and for the benefit of advertisers. The rationale is to show people “what they like or are likely to like”. For example, if my wife engages mostly with posts that are about travel or nature, a platform will profile her and show her posts that have to do with travel or nature. If I click and comment more on posts that have to do with politics, I’ll see more content that is about politics. She’ll see less about politics compared to me and I’ll see less about travel and nature compared to her. This is good, and it isn’t the kind of behavior that gives platform publishers something to hide.

Showing people what they like the most keeps users on the platform for more time, and creates more value for advertisers. Although social media publishers have the right to keep algorithms that do this hidden from the general public, behavior of a platform doing this kind of selective exposure isn’t something that many end-users would find objectionable.

There is another kind of selective exposure that most end-users don’t know about, and may feel is unfair. This is an invisible kind of exposure.

Dialed Back Inside the Black Box

The same algorithms that dialed back unpaid content on social media don’t just impact businesses. They also impact average people’s ability to have content exposed. Facebook’s “News Feed” started out showing users virtually everything Friends posted. It doesn’t work that way any more. Now, inside a black box, using rules and factors that are hidden from end-users like you and me, social media platforms select what we get to see. If a social media platform’s owners want nobody to see your posts, they can dial your account back so your posts hardly get shown to anyone. The mechanism of squelching your content differs slightly on each platform, but the end result is the same: no matter how many Friends and Followers you have, only a tiny segment of them will see what you posted.

Starved on the Street Instead of Being Put in Jail

The insidious part about being dialed down on social media is that there’s no definitive indicator that it’s happened to your account. Unlike having a post censored, removed or being told you’re being punished for breaking rules, being silenced happens silently. The only real way of knowing it’s occurred is to observe the change in exposure empirically. Yesterday’s posts may have been exposed to hundreds of people, but today’s aren’t shown to twenty.

There’s been debate whether tech companies have the right to censor, moderate or squelch free speech in the form of content contributions by their users. Some of this debate has focused on the difference between “platforms” versus “publications”. This website, ClickWhisperer.com, clearly fits within the definition of publication.

However, if you look below this article, there’s a feature where you, the reader, can comment on what you’re reading here. So this website isn’t purely a publication. Because readers are able to comment, it could also be thought of as a platform. A publication that does not show comments from readers, however, is purely a publication.

Unboosting on Facebook

Facebook lets businesses “boost” a post by paying. There’s also an opposite factor, governed by Facebook employees, contractors and fact-checking partners. It’s called “Unboosting”. If you, as a person or as a business, are getting Unboosted, that means your posts are being shown to fewer people.

It happened to me, on a Page I maintain called 4boca. This Page is a social media presence for a local blog I publish. Before my transgressions, while the Page was squeaky clean, I came under Facebook’s scrutiny. I had published a poll on the 4boca.com website. The poll questions included State and Federal elections. This made Facebook ask me a series of questions and put me through a process of validating my identity and the identity of my business. None of my other Pages had ever triggered inspection by Facebook on that level before. For about a year after that I was using the Page weekly, slowly gaining Page Fans.

Then recently, I was using a technology I developed called RSS NEWS AGGREGATOR, posting the news links I curated on the site to the Facebook Page. One of the news stories I shared was from a website run by a group of American physicians who were asking important questions about the coronavirus pandemic of 2020.

A Fact-Checking organization disagreed with these physicians, and felt it wasn’t appropriate to ask questions of this nature. Simply sharing the news post caused Facebook to notify me that I had shared “misinformation” around the pandemic. The message explained that, because the Page had linked to the website containing that article, “Future Posts will be shown to a smaller audience”.

That is Unboosting. Now that Facebook Page is banned from being able to Boost, or sponsor, posts. I appealed it, pointing out that the news wasn’t my personal sentiment or words. The Page is still around, but the fan count doesn’t increase like it used to. No big loss… perhaps.

Muting on Twitter

I know someone who worked at Twitter. He told me about “Muting” and “Mute Words”. If you say the wrong thing, use the wrong terms, your Twitter account is going to be scored as one that should be exposed less. It works the same as Facebook.

Twitter plays favorites in the same way. What Twitter does have, that Facebook isn’t openly blatant about, is giving certain people blue checks, making them “Verified” accounts. Although Twitter does publish criteria for who gets a blue check or not, following the guidelines doesn’t guarantee getting a blue check.

Twitter admits it will take away an account’s Verification when it detects the following: Hateful conduct, abusive behavior, glorification of violence, civil integrity policy, private information policy, political manipulation and spam policy. So basically nobody can talk about anything of import, and what’s allowed or not allowed is subject to interpretation.

If you were never Verified in the first place, never got a blue check, you can bet those same criteria will be applied against your account, silently diminishing the secret score used against you, hidden inside Twitter’s black box.

Shadowbanning on YouTube

A lot of YouTubers complain about being mistreated by YouTube, in spite of the fact that I end up seeing them complaining about it. They complain about being demonetized, where YouTube stops running ads and stops paying them. YouTubers also complain about being “Shadowbanned”. Essentially they’re complaining about the algorithm, and how they noticed that the views they were once getting have dropped, consistently, across the board.

For example they’d be getting 150 new followers a day, and 70,000 views per day – but then all of a sudden their new followers drop to 25 per day and they get 15,000 views. They’re still getting something, but it wasn’t what it was. They’re not censored or cut off completely, but things aren’t like they were. They describe the phenomenon as “Shadowbanning”. They’re banned, but not really, just diminished.
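Since the only way to notice this is to compare the numbers before and after, here is a minimal sketch in Python of that comparison. The function name is hypothetical, and the figures are just the illustrative ones from the example above, not data from any real channel.

```python
def percent_drop(before: float, after: float) -> float:
    """Return the percentage decrease from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be a positive number")
    return (before - after) / before * 100


# Illustrative daily figures from the example in the text.
views_drop = percent_drop(70_000, 15_000)
followers_drop = percent_drop(150, 25)

print(f"Daily views fell by about {views_drop:.1f}%")
print(f"New followers per day fell by about {followers_drop:.1f}%")
```

A calculation like this only measures how big the change is. It can’t say what caused it, which is exactly the frustration these YouTubers describe.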

Of course the number of views and new followers a YouTuber gets is driven by YouTube’s algorithm. It’s still just called “The Algorithm” by the online marketing industry. It doesn’t have a unique name like Facebook’s EdgeRank. YouTube’s algorithm makes recommendations for what gets seen 70% of the time, when viewers watch a video that follows one they requested. It determines what plays next. If the algorithm likes your video it gets shown to new audiences. If it doesn’t then tough luck – make another one and see how it does. If the algorithm is biased against you, over and above your content, then you might find yourself getting fewer views than someone else would if they had published the same content.